On the 31st of January, a member of staff at the popular Git hosting provider Gitlab accidentally ran a command on their production database server.

The fateful command was: rm -Rvf.

Any readers with Linux experience will know that this essentially means “delete this, and everything inside it, and don’t check if we mean it”.
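You can see what each flag does without putting anything at risk. The sketch below runs the same command against a throwaway directory created with mktemp. The directory and file names are made up for the demo.

```shell
# Work only inside a throwaway directory, never a real path.
scratch=$(mktemp -d)
mkdir -p "$scratch/data/nested"
touch "$scratch/data/notes.txt" "$scratch/data/nested/db.conf"
# Write-protect one file: an interactive `rm` would stop and ask before deleting it.
chmod a-w "$scratch/data/notes.txt"

# -R: descend into directories and delete everything beneath them
# -v: print each file and directory as it is removed
# -f: never prompt, and silently ignore paths that don't exist
rm -Rvf "$scratch/data" "$scratch/no-such-path"
echo "rm exit status: $?"   # prints "rm exit status: 0"

rmdir "$scratch"   # clean up the now-empty scratch directory
```

The missing path and the write-protected file are exactly the cases where a plain rm would complain or ask. The -f flag silences both.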

Now this alone would have been an extremely damaging event for Gitlab. It would have meant several hours of downtime for a website that many developers rely on as a place to store and share their code. However, it soon became apparent that things were worse than they first seemed.

Gitlab went public

Gitlab have a publicly available document in which they discuss the incident and how to recover from this disaster. It makes interesting reading and can be found here. (My utmost respect goes out to Gitlab for doing that publicly; many organisations would have hidden behind PR and kept quiet until after the fact!)

As you can see from the document, Gitlab, the second biggest provider of hosted Git services in the world, had five different backup and replication systems in place.

None of them were working.

This is more than a little surprising, given the nature of Gitlab’s service. People rely on Gitlab as a “safe place” to store their code and, rightly or wrongly, would likely assume that a cloud-based service could be relied upon to look after their hard work.

How we prevent data loss

As a man who has been the victim of data loss, I’m really rather paranoid about backing up my data, both personally and professionally, and that extends to the many servers that I manage here at Laser Red.

All of our VM-based hosting is backed up to a secure location daily and snapshotted regularly. This means that, should the worst happen, we have a fallback, and we regularly check that it works.
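Checking that a backup works can be partly automated. The script below is an illustrative sketch rather than a description of any particular setup. It rests on some hypothetical assumptions:

- Each backup is a .tar.gz archive in a single directory.
- Each archive has a sidecar <name>.sha256 file, written by sha256sum at backup time.
- The host runs Linux with GNU tools.

The function check_backups is a name made up for this example. It fails if no archive has been written in the last 24 hours, if an archive no longer matches its checksum, or if tar cannot read it.

```shell
#!/usr/bin/env bash
# Illustrative daily backup check. Hypothetical assumptions, not any real
# provider's setup: backups are *.tar.gz archives in one directory, each with
# a sidecar "<name>.sha256" written by `sha256sum <name>` inside that
# directory, on a Linux host with GNU coreutils, findutils and tar.

# check_backups DIR
# Succeeds only if DIR holds at least one archive modified in the last
# 24 hours, and every such archive matches its checksum and can be read by tar.
check_backups() {
  local dir=$1 found=0 archive name
  while IFS= read -r -d '' archive; do
    found=1
    name=$(basename "$archive")
    # Integrity: the archive still matches the checksum recorded at backup time.
    if ! (cd "$dir" && sha256sum --check --quiet "$name.sha256"); then
      echo "CHECKSUM MISMATCH: $archive" >&2
      return 1
    fi
    # Readability: tar can walk the whole archive without errors.
    if ! tar -tzf "$archive" > /dev/null; then
      echo "UNREADABLE ARCHIVE: $archive" >&2
      return 1
    fi
  done < <(find "$dir" -maxdepth 1 -type f -name '*.tar.gz' -mmin -1440 -print0)

  # Freshness: no recent archive at all means the backup job has stopped.
  if [ "$found" -eq 0 ]; then
    echo "NO BACKUP IN THE LAST 24 HOURS: $dir" >&2
    return 1
  fi
  echo "OK: recent backups in $dir verified"
}

# When executed as a script (e.g. from a daily cron job), check the directory
# given as the first argument; the exit status reports the result.
if [ "${BASH_SOURCE[0]}" = "$0" ] && [ "$#" -ge 1 ]; then
  check_backups "$1"
fi
```

Run the script daily from cron with the backup directory as its only argument. A non-zero exit status is then the signal for someone to investigate. This is a smoke test rather than a guarantee. A checksum and a clean tar listing show that an archive is intact, but only restoring it onto a spare machine proves the backup actually works.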

What can we learn?

So what can we learn from this embarrassing incident? Well, several things:

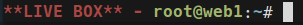

- Ensure that it is very clear to anyone connected to a production server, that they are connected to a production server. We tend to use the prompt to make this obvious:

- If you’re running rm -Rvf on a live production server, you probably shouldn’t be.

- If you have backup systems in place, do not assume they’re working. Make it somebody’s responsibility to check they’re working, every day.

- When you have hundreds of thousands of users, don’t have a single point of failure!
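For the first lesson, here is a minimal sketch of a production prompt for bash. It assumes a hypothetical convention in which this fragment is only added to ~/.bashrc on production machines. The label is therefore set explicitly rather than guessed from the hostname. ENV_LABEL is a name made up for the example. Every prompt then starts with a bold white-on-red PRODUCTION label, followed by the usual user@host:directory.

```shell
# ~/.bashrc fragment, deployed only to production hosts (hypothetical
# convention: the label is set explicitly, not derived from the hostname).
ENV_LABEL="PRODUCTION"

# \e[1;37;41m = bold white text on a red background, \e[0m = reset.
# \[ and \] mark the escape codes as zero-width so bash's line editing
# still measures the prompt correctly. \u@\h:\w is user@host:directory.
PS1='\[\e[1;37;41m\] '"$ENV_LABEL"' \[\e[0m\] \u@\h:\w\$ '
```

The prompt shows the hostname (\h) as well as the label. This helps to tell apart servers whose names differ by a single character.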

The last point is the one that concerns me most. It makes me wonder how many other cloud-based services that people rely on are one seven-character command away from complete disaster.

We can help!

If you would like to know more about our secure hosting and how we can help, drop us a line. With our 99% uptime guarantee, you could say we are pretty confident when it comes to website hosting!